Why Does AI Programming Start Strong but Become Harder to Maintain?

Oct 5, 2025 · 1651 words

Originally published on the WeChat Official Account FUTURE CODER (未来开发者).

The barrier to entry for AI programming keeps getting lower. Since terms like "Vibe Coding" appeared, an attractive picture has been painted: say a single sentence, and a polished interface or a sophisticated product feature appears instantly. Full of anticipation, you try Vibe Coding yourself and let the AI write all of the code.

The ideal is full of promise, but reality is often harsh. At first, on a small project built from scratch or on simple features, the AI really does deliver a perfect score. But as the project grows, the experience fades: the AI misunderstands you more often, its code becomes more error-prone, and debugging takes longer and longer.

Why does this happen? Is AI programming only good for building toys? Is it incapable of producing maintainable, useful code?

If these questions have been bothering you, this article will answer them. These problems stem from inherent characteristics of Large Language Models (LLMs), and this article introduces effective methods for keeping AI-generated code maintainable.

The Essence of the Maintainability Problem in AI Programming

The "smooth start, difficult sequel" problem in AI programming is a classic software engineering issue: maintainability. Maintainability is the property of code that lets it be easily modified, extended, and repaired during later development.

Maintainability was a frequently discussed topic long before AI arrived. Unmaintainable code usually results from messy structure and convoluted, hard-to-follow logic, which make adding new features later extremely difficult.

The larger the project and the more complex its features, the harder it is to maintain. It's like stacking building blocks: the higher you want to build, the more stable the base must be. With a weak foundation, you might barely reach 10 layers, but by 30 the whole tower may collapse.

AI programming amplifies the problem of project maintainability. If most of your project's code is written by AI, you will find that the blocks collapse very quickly. If you ask it to build 10 layers, its goal is simply to build 10 layers that don't fall down right now; as for whether it will collapse when you try to add an 11th layer, who cares?

This is the AI's blind spot: it completes each task in the most direct way possible.

The AI is like an irresponsible intern who sees only the immediate goal; maintainability is simply outside its scope. (This is also why you should never communicate with AI based purely on "human" assumptions. For a detailed explanation, see my article "Why Does AI Programming Perform Poorly? Because You Communicate with AI as If It Were a Human.")

This inherent trait makes maintainability hard to achieve. So how can we constrain the AI to write stable, scalable code?

The Shortcut: Establishing Programming Standards for AI

This is the "basic version" method I recommend. If you want to quickly improve the maintainability of AI-written code, it is the fastest and simplest solution.

The logic is simple: since the AI follows only explicit instructions, we just need to write our programming standards and maintainability practices directly into those instructions.

Most of the tips you see on forums and social media follow this logic: take project standards distilled by humans and turn them into Prompts for the AI to follow. For example:

When Cursor became popular, a well-known "RIPER-5 Mode" circulated that helped Cursor handle complex projects. RIPER-5 defines five stages of programming (Research, Innovate, Plan, Execute, and Review) and specifies behavioral rules for each stage plus rules for switching between them, making Cursor's behavior strictly traceable.

When Claude Code gained popularity, someone turned the father of Linux into a Prompt: a CLAUDE.md file with "Role Definitions," "Core Philosophy," and "Communication Principles" that gives Claude Code the structured thinking style of Linus Torvalds. For an explanation of this Prompt, see my previous article "Why Does Putting the Father of Linux into Claude Code Work So Well? On the Underlying Logic of AI Programming."

Kiro, a newly released AI programming tool, goes further and builds in a software development workflow covering requirements documentation, coding, and test verification. This process constrains the AI's behavior and avoids the unpredictability of its "creative" liberties. (If you are interested in how Kiro works, leave a comment and I will consider writing a breakdown of its process.)

Therefore, if you want to quickly improve the maintainability of AI-written code, applying these ready-made programming standards directly is the most efficient way.
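Mechanically, "writing standards into the instructions" means the same rules text accompanies every request. The sketch below is a minimal illustration, assuming a generic chat-message format (a list of `{"role", "content"}` dicts with a `system` role); the rules themselves and the names `PROJECT_STANDARDS` and `build_messages` are hypothetical, not how Cursor, Claude Code, or Kiro implement this internally.

```python
from typing import Dict, List

Message = Dict[str, str]

# Hypothetical example rules; in practice this text would live in a rules
# file (such as a CLAUDE.md) maintained by the team.
PROJECT_STANDARDS = """\
- Follow the existing module layout; do not add new top-level packages.
- Keep functions small and single-purpose.
- Every change must include or update tests.
"""


def build_messages(task: str, standards: str = PROJECT_STANDARDS) -> List[Message]:
    """Prepend the standards as a system message so every request carries them.

    Assumption: the model API accepts a list of role/content messages.
    """
    return [
        {"role": "system", "content": "Follow these project standards:\n" + standards},
        {"role": "user", "content": task},
    ]
```

Because the standards are attached by code rather than retyped, the AI sees them on every task, which is the whole point of the shortcut.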

The Advanced Method: Letting AI Think Deeply About Key Decisions

Ready-made programming standards might lift maintainability from, say, 20 points to 40, but improving beyond that is difficult.

That is because these standards guide the AI toward general behavioral norms, but they cannot solve problems specific to your project. This is what the "universal Prompts" advertised online won't tell you.

Consider this scenario:

Your project needs to implement a key feature, and you can choose between Option A (simple implementation, clear structure) and Option B (complex architecture, easy to scale). This is a common choice in many projects and has a significant impact on future maintainability.
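To make the trade-off concrete, here is a hypothetical export feature written both ways. Every name in it (`export_rows_a`, `EXPORTERS`, `register`, and so on) is invented for illustration. Neither version is wrong in isolation: Option B only pays off if more formats are actually coming, and that is exactly the kind of project background the AI may not have.

```python
import csv
import io
import json
from typing import Callable, Dict, List

Row = Dict[str, str]


# Option A (hypothetical): one direct function. Easy to read today,
# but every new format means editing this function. Assumes rows is non-empty.
def export_rows_a(rows: List[Row], fmt: str) -> str:
    if fmt == "json":
        return json.dumps(rows)
    if fmt == "csv":
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
        writer.writeheader()
        writer.writerows(rows)
        return buf.getvalue()
    raise ValueError(f"unsupported format: {fmt}")


# Option B (hypothetical): a registry of exporters. More structure up front,
# but a new format is added by registering a function, not by editing old code.
Exporter = Callable[[List[Row]], str]
EXPORTERS: Dict[str, Exporter] = {}


def register(fmt: str) -> Callable[[Exporter], Exporter]:
    """Decorator that records an exporter under a format name."""
    def decorator(fn: Exporter) -> Exporter:
        EXPORTERS[fmt] = fn
        return fn
    return decorator


@register("json")
def to_json(rows: List[Row]) -> str:
    return json.dumps(rows)


@register("csv")
def to_csv(rows: List[Row]) -> str:
    # Assumes rows is non-empty, as in Option A.
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def export_rows_b(rows: List[Row], fmt: str) -> str:
    if fmt not in EXPORTERS:
        raise ValueError(f"unsupported format: {fmt}")
    return EXPORTERS[fmt](rows)
```

Both produce the same output; they differ only in how much work the next format costs and how much structure you carry until then.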

You might ask: in this situation, can't I just tell the AI, "Please choose the most suitable option"?

Not really. You might expect that, faced with "Option A vs. Option B," the AI will behave like a human programmer: first research the pros and cons of both, then choose based on the project's context.

In reality, the AI still completes the task in the most direct way. Here, "direct" means that even when your instructions lack the background information needed to judge which option is better, the AI won't stop to ask you. Instead, it guesses the most "suitable" option from whatever context it has.

This is the AI's behavioral pattern: it won't admit "I don't know." Instead, it does its best to give the answer it judges most likely to be correct, even if that answer is wildly wrong.

This is the maintainability bottleneck in many projects: the AI often cannot choose the truly appropriate solution. For this situation, I recommend an advanced method that forces the AI to think carefully about its plan.

Here is a process I have been using lately that is effective for complex decisions:

- Describe my requirements and have the AI draft a plan in a document.

- Clear the context (start a new chat), then discuss and review the plan from the previous step, focusing on whether it fits the project's current background and stage.

- If the plan is fine, proceed with implementation; otherwise, discard or revise the original plan and start over.

To work around the AI's blind spot, I save its plan first and then force it to scrutinize that plan, giving it a chance to say "I don't know" or "I'm missing some key information."

The key to this process: when reviewing the plan, you must start a new chat with a cleared context. If you continue in the original chat, the AI will be influenced by the conversation history and will likely conclude that the original plan was perfectly reasonable instead of reflecting on it critically.

This is a classic case of "context conflict" in AI Agents. If the AI drafts a plan and you then point out problems in the same conversation (the same context) and ask it to reflect, the context contains both "the plan is right" and "the plan has problems" at once. This confuses the AI's reasoning and degrades its output. If you are interested in the principles behind this, let me know and I will write about them in a future article.
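The plan-then-review process can be sketched in code. This is a minimal illustration under stated assumptions: `ChatFn` stands for any function that sends one conversation's full message list to a model and returns the reply, and `draft_plan` and `review_plan` are hypothetical helpers, not part of any tool. What it shows is structural: the review conversation starts from an empty history and receives only the saved plan, so the drafting conversation cannot bias the review.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Assumption: ChatFn stands in for whatever model client you use. It takes the
# full message list for ONE conversation and returns the model's reply text.
ChatFn = Callable[[List[Message]], str]


def draft_plan(chat: ChatFn, requirements: str) -> str:
    """Step 1: describe the requirements and have the model write a plan."""
    messages = [{"role": "user",
                 "content": "Draft an implementation plan for:\n" + requirements}]
    return chat(messages)  # save this text as the plan document


def review_plan(chat: ChatFn, plan: str, project_context: str) -> str:
    """Step 2: review the saved plan in a brand-new message list.

    Only the plan text is carried over; the drafting conversation is not,
    so the review is not anchored on the model's earlier reasoning.
    """
    messages = [{"role": "user",
                 "content": ("Review this plan. Does it fit the project's current "
                             "background and stage? If key information is missing, "
                             "say so instead of guessing.\n\n"
                             "Project context:\n" + project_context +
                             "\n\nPlan:\n" + plan)}]
    return chat(messages)


# Demo with a fake model that records every conversation it receives.
calls: List[List[Message]] = []


def fake_chat(messages: List[Message]) -> str:
    calls.append(list(messages))
    return "Plan: use Option A" if len(calls) == 1 else "Missing: expected data volume"


plan = draft_plan(fake_chat, "Add an export feature")
review = review_plan(fake_chat, plan, "Early-stage internal tool")
# Step 3 stays a human decision: implement, revise, or discard the plan.
```

In the demo, the second conversation contains a single message holding the plan and context, never the drafting request, which is the "clear the context" rule expressed as data.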

For a project's fundamental, critical features, use this method to make the AI think deeply about key decisions and take your project's maintainability to the next level.

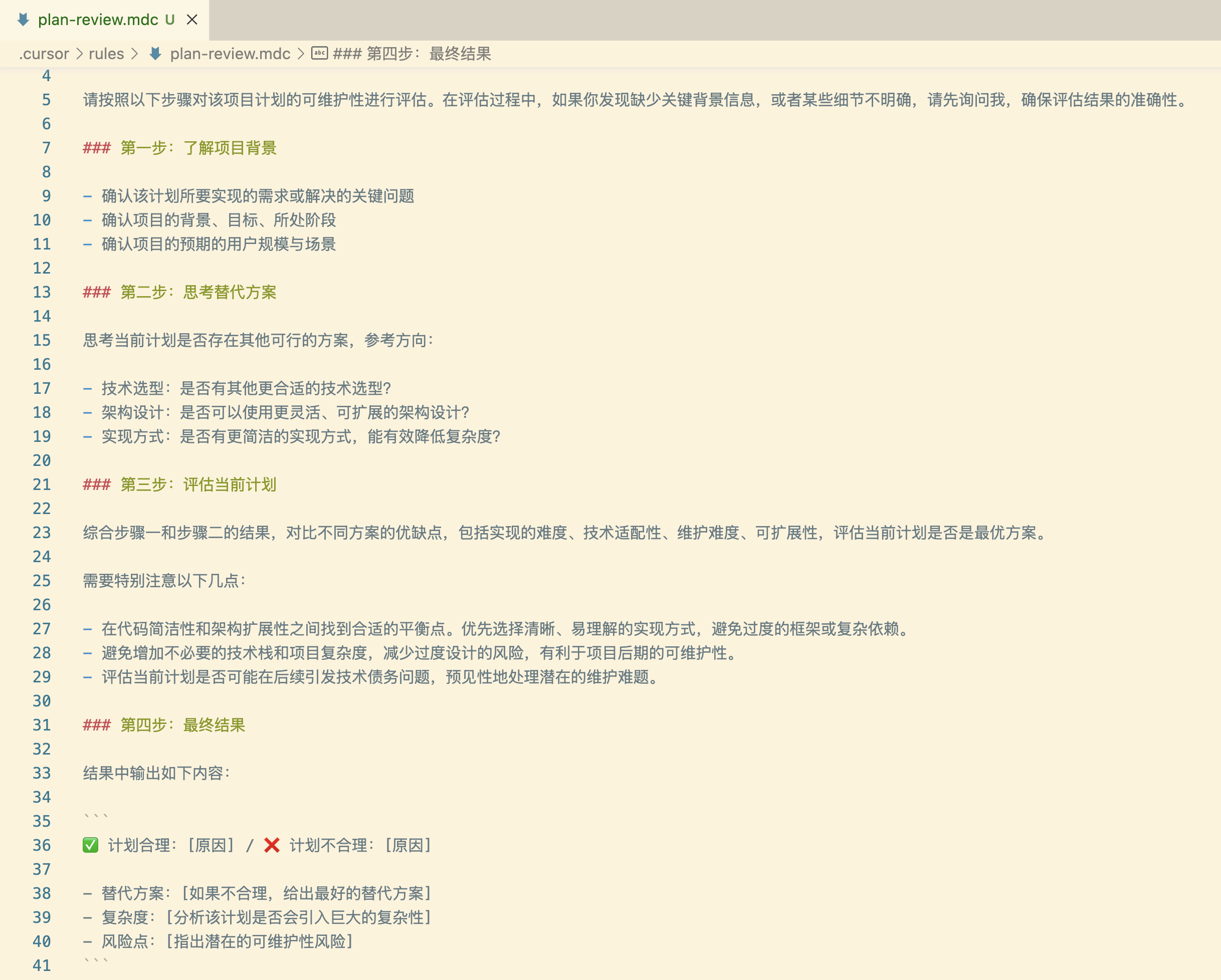

For reference, the specific Prompt I use is available here: https://github.com/nettee/ai-collection/blob/master/content/prompts/plan-review.md

Summary

By now, you can see why AI programming so often falls into the "stunning start, struggling finish" trap. The core issue is a lack of maintainability. The AI is like a goal-driven but short-sighted intern who completes tasks in the most direct way without considering future scalability or stability.

To solve this problem, you have two choices:

- The Shortcut: Specify explicit programming standards for the AI. This quickly constrains AI behavior and raises code quality to a basic usable level.

- The Advanced Method: Have the AI draft a plan, then force it to reflect critically on that plan in a cleared context. This produces decisions tailored to the project and improves long-term maintainability.

This also shows that AI programming is far from simple "Vibe Coding." We have to act as gatekeepers at critical junctures. By continuously constraining the AI's behavior, we ensure it doesn't just write code that "barely works," but builds a project that can keep evolving.

I hope this article helps you master AI programming, so that you can go both fast and far and complete AI programming projects with higher stability and quality.